It is fundamental for personal robots to reliably navigate to a specified goal. To study this task, PointGoal navigation has been introduced in simulated Embodied AI environments. Recent advances solve this PointGoal navigation task with near-perfect accuracy (99.6% success) in photo-realistically simulated environments, assuming noiseless egocentric vision, noiseless actuation, and, most importantly, perfect localization. However, under realistic noise models for visual sensors and actuation, and without access to a "GPS and Compass sensor", the 99.6%-success agents for PointGoal navigation succeed only 0.3% of the time. In this work, we demonstrate the surprising effectiveness of visual odometry for the task of PointGoal navigation in this realistic setting, i.e., with realistic noise models for perception and actuation and without access to GPS and Compass sensors. We show that integrating visual odometry techniques into navigation policies improves the state of the art on the popular Habitat PointNav benchmark by a large margin, improving success from 64.5% to 71.7% while executing 6.4 times faster.

Results

The agent is asked to navigate from the blue square to the green square. The blue curve is the actual path the agent takes, while the red curve is the agent's estimate of its location from the visual odometry (VO) model, obtained by integrating the per-step SE(2) estimates. The more closely the red curve overlaps the blue curve, the more accurate the VO estimate is.
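The integration step behind the red curve can be illustrated with a minimal sketch. It assumes each VO output is a planar rigid motion `(dx, dy, dtheta)` expressed in the agent's frame at the start of the step, with x pointing forward, y to the left, and counter-clockwise rotation. These conventions and the function names `compose_se2` and `integrate_se2` are hypothetical choices for illustration, not the actual implementation or the simulator's coordinate system.

```python
import math


def compose_se2(pose, delta):
    """Apply one local-frame step (dx, dy, dtheta) to a global pose (x, y, theta)."""
    x, y, theta = pose
    dx, dy, dtheta = delta
    c, s = math.cos(theta), math.sin(theta)
    # Rotate the local translation into the global frame, then add it.
    new_x = x + c * dx - s * dy
    new_y = y + s * dx + c * dy
    # Accumulate the heading and wrap it to [-pi, pi).
    new_theta = (theta + dtheta + math.pi) % (2.0 * math.pi) - math.pi
    return (new_x, new_y, new_theta)


def integrate_se2(deltas, start=(0.0, 0.0, 0.0)):
    """Chain per-step estimates into a trajectory; returns len(deltas) + 1 poses."""
    poses = [start]
    for delta in deltas:
        poses.append(compose_se2(poses[-1], delta))
    return poses


if __name__ == "__main__":
    # Illustrative steps only: move forward, turn left 90 degrees, move forward.
    steps = [(0.25, 0.0, 0.0), (0.0, 0.0, math.pi / 2), (0.25, 0.0, 0.0)]
    for pose in integrate_se2(steps):
        print(pose)
```

Because each pose is built from the previous one, any per-step estimation error carries forward into all later poses, which is why close overlap of the two curves over a long episode indicates accurate per-step estimates.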

Note how accurate the VO estimate remains even when the agent gets stuck for a long time, as in the second and third videos. The VO module has never seen the house/scene shown in the videos during training.

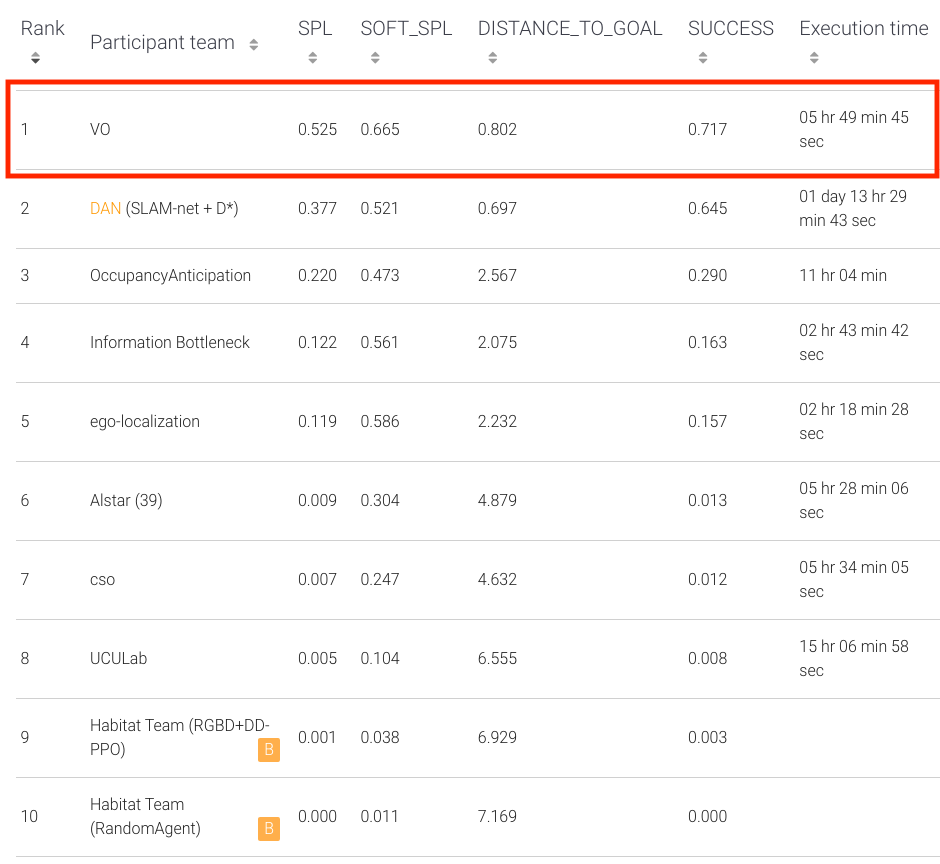

Online Leaderboard

Under the settings of the Habitat Challenge 2020, our approach improves success from 64.5% to 71.7% while executing 6.4 times faster.

Bibtex

@inproceedings{Zhao2021pointnav,
  title={{The Surprising Effectiveness of Visual Odometry Techniques for Embodied PointGoal Navigation}},
  author={Xiaoming Zhao and Harsh Agrawal and Dhruv Batra and Alexander Schwing},
  booktitle={ICCV},
  year={2021},
}

Acknowledgements

This work is supported in part by NSF under Grants #1718221, 2008387, 2045586, and MRI #1725729, by NIFA award 2020-67021-32799, and by UIUC, Samsung, Amazon, 3M, and Cisco Systems Inc. (Gift Award CG 1377144; thanks for access to Arcetri).